Alpha-Beta Pruning

Two modifications are made to the agent: the minimax algorithm is optimized with alpha-beta pruning and complexity is added to the heuristic.

Alpha-Beta pruning algorithm keeps track of two values - alpha and beta - at every node. The alpha value corresponds to the evaluation for the agent and the beta value corresponds to the evaluation for the opponent.

If at any node alpha becomes greater than beta, all the nodes below it are pruned since they cannot possibly affect the overall evaluation - because the algorithm always tries to maximize alpha starting from a very low value, and minimize beta starting from a very high value.

Six new components are added to the heuristic - number of times pieces occur thrice in a row with base covered, number of times pieces occur twice in a row with base covered, number of times pieces occur twice in a row with base partially covered for both the agent and the opponent.

The original components now count the number of times pieces occur in a row without base being covered at all.

Function to apply alpha-beta pruning:

def alphabeta(node, depth, maximizingPlayer, mark, config, alpha=-np.inf, beta=np.inf):

if depth == 0 or is_terminal_node(node, config):

return get_heuristic(node, mark, config)

valid_moves = [c for c in range(config.columns) if node[0][c] == 0]

if maximizingPlayer:

for col in valid_moves:

child = drop_piece(node, col, mark, config)

alpha = max(alpha, alphabeta(child, depth - 1, False, mark, config, alpha, beta))

if alpha >= beta:

return beta

return alpha

else:

for col in valid_moves:

child = drop_piece(node, col, 3 - mark, config)

beta = min(beta, alphabeta(child, depth - 1, True, mark, config, alpha, beta))

if alpha >= beta:

return alpha

return beta

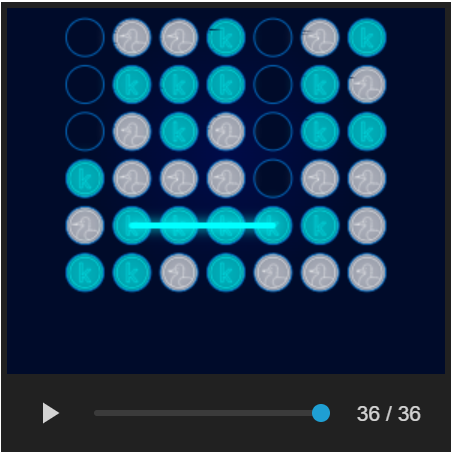

Here is a snapshot of the agent playing against itself:

The game lasted 36 moves.